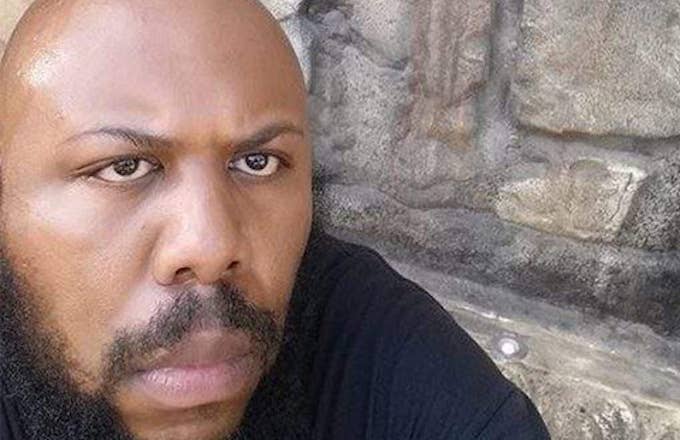

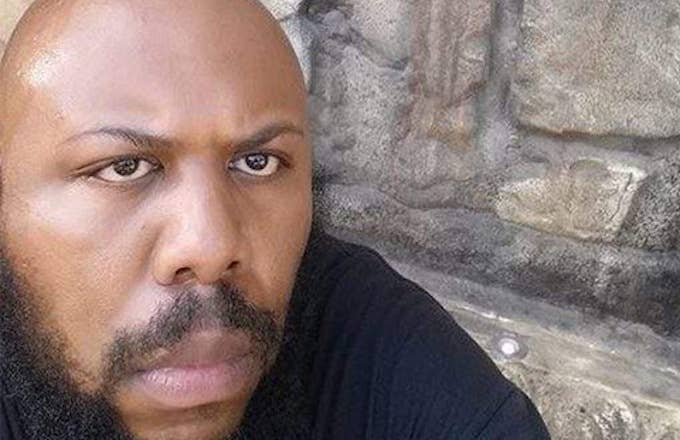

This past Easter Sunday, the last thing I thought I'd see when "Cleveland" was trending on Twitter was a man recording himself murdering another individual. As we all know now, a man who has been identified as Steve Stephens was seen in a video shooting and killing a 74-year-old man by the name of Robert Goodwin, Sr. in broad daylight; Stephens then went on Facebook Live to brag about killing more people, although there's been no confirmation of those claims.

In the wake of this tragedy (which has forced Cleveland residents to stay indoors in fear of losing their lives), Facebook released a statement to journalists, saying "This is a horrific crime and we do not allow this kind of content on Facebook. We work hard to keep a safe environment on Facebook, and are in touch with law enforcement in emergencies when there are direct threats to physical safety." In a longer statement released after the tragedy, Facebook also made sure to point out that Stephens didn't murder Robert Goodwin, Sr. on Facebook Live; he uploaded a video of the murder after the fact. Facebook seems to place some of the blame on users for failing to report the video quickly: its timeline says almost 90 minutes passed before the video of Goodwin, Sr.'s murder was reported. By then, Stephens had already posted his Facebook Live video confessing to the murder.

The distinction is particularly important in light of the backlash the company has received since Facebook Live was introduced to all users roughly one year ago. We've read the horror stories of teen girls and grown men taking their lives on the service, while others have been straight-up tortured and sexually assaulted in live broadcasts; it's gotten to the point where Facebook had to enact suicide prevention protocols to help alert authorities when a user might attempt to take their own life for the world to see. The question is, should Facebook be doing more?

According to a brilliant piece from The Verge on YouTube's history with moderating content, it isn't an easy battle. We're talking about a team dedicated to watching every video that's been flagged, for any of a multitude of reasons: people entrusted to sit through the violent, gross, disturbing, and disgusting sides of life, then tasked with rating how each of those videos should be flagged. It's a tough job, but someone has to do it. And while policing live video is no doubt a huge undertaking, it's something Facebook has to meet head-on.

The emphasis Facebook has placed on video only further complicates matters. When Facebook launched Facebook Live, Mark Zuckerberg told BuzzFeed that he "wouldn’t be surprised if you fast-forward five years and most of the content that people see on Facebook and are sharing on a day-to-day basis is video." If you're a creative who uses Facebook as a tool to get your work seen, you know that a video post will move much faster than a simple photo or linked post; it's just the nature of the beast these days. You also know, if you've worked in a certain capacity on Facebook, that uploading someone else's content (be it a clip from a movie or a video with a certain song in it) can be met with a note telling you to cut the sh*t, with the promise of stronger repercussions if you do it again.

The situation lies in largely uncharted territory. According to Zuckerberg, roughly 1.7 billion people were using Facebook as of July 2016; that's a potentially enormous audience to witness another suicide, murder, or sexual assault during a Facebook Live stream. As of December 2016, Facebook was reportedly relying more on artificial intelligence to moderate its Live videos, a shift from how it used to approach flagged content (with actual humans, à la YouTube in the early days). Facebook's VP of Global Operations, Justin Osofsky, brought up the company's experiments with A.I. moderation, saying "Artificial intelligence, for example, plays an important part in this work, helping us prevent the videos from being re-shared in their entirety." Keep in mind, it took almost 90 minutes for a human to report a video containing an actual murder; how are we to be certain that Facebook's A.I. will be able to shut down the next gruesome video before it goes viral? To be clear: Facebook's plans aren't the problem. But when anyone with an active Facebook account can potentially stream anything from a threesome to a beheading, the question becomes "Is Facebook doing enough?"

This is one of the toughest problems Facebook might ever have to face, especially with the obvious push Live has been given ahead of other forms of content on the service. Facebook needs to sort itself out, or the future of Live could be in jeopardy.